You can expect an explicitly political show this Sunday at the Oscars: at other awards shows this year, virtually every winner—from the most famous actress to the lowliest techie—has let loose with their opposition to Donald Trump. So I figure it won't hurt much if I bring one other element of politics to Hollywood—election handicapping.

Political forecasting sites like Inside Elections give each race a rating on a Solid-Likely-Leans scale, and for the past few years, I've done the same with each Oscar category. The idea is to give casual awards watchers an idea of the conventional wisdom for each of the 24 Academy Award mini-races. Check out this year's race ratings below; for my personal picks (which sometimes diverge from the conventional wisdom!), click here.

Best Picture: Solid La La Land

With a record-tying 14 nominations, this modern movie musical is primed to pull off the rare Oscars-night sweep.

Best Director: Solid Damien Chazelle

La La Land helmer Chazelle won the nearly identical precursor prize, Outstanding Directorial Achievement at the Directors Guild of America Awards, virtually guaranteeing he will be the youngest Best Director winner in history, at 32 years and 38 days.
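The age figure is plain calendar arithmetic. Below is a minimal Python sketch that reproduces it. It assumes Chazelle's birth date of January 19, 1985, and the February 26, 2017, ceremony date; neither date appears in the post. `age_in_years_and_days` is a hypothetical helper written only for this illustration.

```python
from datetime import date


def age_in_years_and_days(born: date, on: date) -> tuple[int, int]:
    """Return (whole years, leftover days) between a birth date and a later date."""
    years = on.year - born.year
    # Step back one year if the birthday hasn't arrived yet in `on`'s year.
    if (on.month, on.day) < (born.month, born.day):
        years -= 1
    # Note: a Feb. 29 birthday would need special handling in replace().
    last_birthday = born.replace(year=born.year + years)
    return years, (on - last_birthday).days


# Assumed dates: Chazelle's birthday and the 89th Academy Awards ceremony.
print(age_in_years_and_days(date(1985, 1, 19), date(2017, 2, 26)))  # (32, 38)
```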

Best Actor: Tossup

The closest major race of the night pits Casey Affleck of Manchester by the Sea against Denzel Washington's self-directed effort in Fences. Affleck has been the assumed frontrunner all season, but Washington won the Screen Actors Guild Award, and actors represent the largest segment of Academy voters. If Washington wins, he would be the seventh actor to earn three Oscars—and the first African American.

Best Actress: Solid Emma Stone

Stone carries La La Land, a film we know Oscar voters love. Competitor campaigns by Jackie's Natalie Portman and Elle's Isabelle Huppert just haven't gotten off the ground.

Best Supporting Actor: Likely Mahershala Ali

Ali (whose full first name is Mahershalalhashbaz) has had a great year, starring in the Netflix shows House of Cards and Luke Cage and appearing in Best Picture nominees Hidden Figures and Moonlight. This win may be for Moonlight, but really it's for an excellent all-around body of work.

Best Supporting Actress: Solid Viola Davis

After being robbed of the 2011 Best Actress Oscar for The Help, Davis is finally back at the dance for Fences, and no one else stands a chance.

Best Adapted Screenplay: Solid Moonlight

Competitors Lion and Arrival have both won precursor awards in this category, but not while Moonlight was nominated opposite them. The consensus seems to be that Moonlight was Hollywood's second-favorite film this year after La La Land, so it's favored here, but that's more a collective educated guess than a mathematical certainty.

Best Original Screenplay: Leans Manchester by the Sea

This category is an old-fashioned horse race between La La Land and Manchester by the Sea. Manchester's script has drawn plenty of praise, and musicals have historically struggled in the writing categories. But then again, the possibility of a La La Land sweep looms large.

Best Foreign Language Film: Tossup

The German black comedy Toni Erdmann was a solid frontrunner in this category until January 27, 2017, when President Donald Trump signed his now-infamous executive order banning citizens of seven predominantly Muslim countries from entering the U.S. Included in the ban was Iranian director Asghar Farhadi, who subsequently lacerated Trump and said he would not attend the Oscars as a result of the order. Since then, Farhadi's film The Salesman has picked up momentum in this category, to the point where many pundits now are picking it to win on a sympathy vote.

Best Animated Feature: Likely Zootopia

Best Documentary Feature: Solid O.J.: Made in America

Best Cinematography: Solid La La Land

Best Costume Design: Leans Jackie

Best Film Editing: Solid La La Land

Best Makeup and Hairstyling: Likely Star Trek Beyond

Best Production Design: Solid La La Land

Best Original Score: Solid La La Land

Best Original Song: Solid "City of Stars"

Best Sound Editing: Leans Hacksaw Ridge

Best Sound Mixing: Likely La La Land

Best Visual Effects: Solid The Jungle Book

Best Documentary Short: Tossup

Best Live-Action Short: Leans Ennemis Intérieurs

Best Animated Short: Likely Piper

Saturday, February 25, 2017

Race Ratings for the 89th Academy Awards

Sunday, February 19, 2017

The Oscars Really Do Spread the Wealth Around

If it's February, it's time to indulge my hobby of handicapping a different kind of election here at Baseballot: the Academy Awards. Notoriously difficult to predict due to a lack of hard data and polling, the Oscars often force prognosticators to resort to fickle historical precedents, working theories, and, worst of all, gut instinct.

One of those working theories is that the Academy of Motion Picture Arts and Sciences likes to "spread the wealth around." The idea is that the Academy has many favorite people and movies that it would like to honor, but it only has a limited number of awards to bestow, so it tries to sprinkle them around strategically so that everyone deserving gets a prize for something. At first, it seems a stretch of the imagination to think that a disparate voting bloc could be so coordinated and strategic in its thinking. Academy members aren't making decisions as a unit; their results are an aggregation of thousands of individual opinions. However, the Academy is still a smaller and more homogeneous electorate than most, and its preferences may reflect the groupthink of the Hollywood insiders who dominate its ranks. Sure enough, a dive into the data suggests that the Academy may indeed lean toward distributing the wealth evenly.

One of this year's hottest Oscar races is Best Actor. Will Casey Affleck join the club of Oscar winners for his portrayal of a Boston janitor in Manchester by the Sea, or will Denzel Washington take home his third trophy for hamming it up in Fences? If you believe that the Oscars spread the wealth around, you'd probably lean toward Affleck, and history suggests that's a good bet. At the last 15 ceremonies, a past Oscar winner has gone up against an Oscar virgin 47 times in one of the four acting categories (Best Actor, Best Actress, Best Supporting Actor, and Best Supporting Actress). A full 32% of nominees in those 47 races were past winners, so, all things being equal, past winners should have won roughly 32% of those races, or about 15 of them. However, all things clearly were not equal: those past winners prevailed just seven times out of 47, or 15%. Looking at the same data another way, they won just seven times out of their 75 nominations, a 9% success rate. That's far lower than a nominee's default chances in a five-person category: one out of five, or 20%.
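Every percentage in that paragraph follows from four counts quoted in the post. A short Python sketch of the arithmetic (the variable names are my own; the counts are the post's):

```python
RACES = 47               # acting races pitting a past winner against a first-timer
NOMINEES_PER_RACE = 5    # every acting category has five nominees
PAST_WINNER_NOMS = 75    # nominations held by past winners in those races
PAST_WINNER_WINS = 7     # races those past winners actually won

total_noms = RACES * NOMINEES_PER_RACE                 # 235 nomination slots
share_of_field = PAST_WINNER_NOMS / total_noms         # share of nominees who were past winners
expected_wins = share_of_field * RACES                 # wins expected if every nominee had equal odds
share_of_wins = PAST_WINNER_WINS / RACES               # share of races past winners actually won
per_nomination = PAST_WINNER_WINS / PAST_WINNER_NOMS   # past winners' success rate per nomination
baseline = 1 / NOMINEES_PER_RACE                       # any nominee's default odds

print(f"{share_of_field:.0%} {share_of_wins:.0%} {per_nomination:.0%} {baseline:.0%}")
# 32% 15% 9% 20%
```

The "equal odds" expectation works out to exactly 15 wins, roughly double the seven past winners actually collected.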

Some caution is still warranted, though. Oscar history is littered with famous counterexamples: the time two-time winner Meryl Streep (The Iron Lady) stole Best Actress out from under the overdue Viola Davis (The Help) at the ceremony honoring 2011 films, or when Sean Penn (Milk), a prior winner for Mystic River, edged out Mickey Rourke (The Wrestler) at the ceremony honoring 2008 films. In addition, the converse of spreading the wealth isn't necessarily true. Even if the Oscars make a concerted effort to keep extra statuettes out of the hands of people who already have them, they probably don't bend over backward to reward undecorated artists who are "due."

Finally, there may be a difference in wealth-spreading between the acting categories, where the nominees and their records are well known, and the craft categories, where the awards are thought of as going to films, not people. This could help explain the wide inequalities in categories like Best Sound Mixing. Sound mixer Scott Millan has won four Oscars in his nine nominations, and legendary sound engineer Fred Hynes won five times in seven tries. By contrast, Greg P. Russell, nominated this year for mixing 13 Hours, has compiled 17 nominations and has never won. And the all-time record for most Oscar nominations without a win belongs to mixer Kevin O'Connell, a 21-time nominee including his bid this year for Hacksaw Ridge. Unfortunately, both will almost certainly lose this year to La La Land mixer Andy Nelson, who has two wins in his previous 20 nominations. Even if this isn't because of the longstanding trophy inequality in Best Sound Mixing, it is certainly consistent with it.

Within each ceremony, the Academy also appears to spread the wealth fairly evenly among movies. Of the last 15 Oscars, seven can be considered "spread the wealth" ceremonies, while only three can really be considered "sweeps" (2013 for Gravity, 2008 for Slumdog Millionaire, and 2003 for The Lord of the Rings: The Return of the King); five are ambiguous. Granted, this is a subjective eyeballing of the data, but I have some hard numbers too. The median number of Oscars won by the winningest film of the night over the last 15 years is five: a respectable total, but not one that screams "unstoppable juggernaut." Twelve of the 15 ceremonies saw the winningest film take six Oscars or fewer. Eight of the 15 years were below the average of 5.4 Oscars for the night's winningest film, represented by the black line in the chart below:

More stats: during the same 15-year span, anywhere between nine and 14 feature films (i.e., not shorts) per year could go home and call themselves an Oscar winner—but 10 out of 15 of the years, that number was 12, 13, or 14 films. Just five times was it nine, 10, or 11 films. Finally, at nine of the 15 ceremonies, the standard deviation of the distribution of Oscars among feature films was less than the average standard deviation, again represented by the black line. (A low standard deviation means that the values in a dataset tend to cluster around the mean—so a year when four films each won three awards has a low standard deviation, but a year when one film won 11, two films won two, and the rest won one has a very high standard deviation.)
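To make the standard-deviation comparison concrete, here is a small Python sketch of the two hypothetical years described above. The post says only that "the rest won one," so the nine one-award films in the lopsided year are an assumption. The post also doesn't say whether it used a population or sample standard deviation; this sketch uses the population version (`statistics.pstdev`), treating each year's winning films as the whole group.

```python
from statistics import pstdev

# Hypothetical ceremonies mirroring the two examples in the text.
even_year = [3, 3, 3, 3]               # four films, three awards each
lopsided_year = [11, 2, 2] + [1] * 9   # assumption: nine films won one award each

for label, wins in [("even", even_year), ("lopsided", lopsided_year)]:
    # Population standard deviation of awards per winning film.
    print(label, round(pstdev(wins), 2))
```

The even year has no spread at all (0.0), while the single 11-award juggernaut pushes the lopsided year to about 2.74, even though most films that year won just one award.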

With only three ceremonies in the past 15 years boasting significantly above-average standard deviations, a low standard deviation appears to be the norm, which means it's typical for the Oscars to be split among more films, each taking fewer awards. In other words, spreading the wealth.

Of course, the pattern is only true until it isn't. Every so often, a juggernaut of a movie does come along to sweep the Oscars: think Titanic, which went 11 for 14 at the ceremony honoring 1997 films. This year appears poised to deliver another of these rare occurrences. La La Land reaped a record-tying 14 nominations, and according to prediction site Gold Derby, it is favored to win 10 trophies next Sunday. Don't let overarching historical trends override year-specific considerations, like the runaway popularity of Damien Chazelle's musical, when making your Oscar picks.

Wednesday, February 15, 2017

After Three Special Elections, What We Do and Don't Know About the 'Trump Effect'

Let's get one thing out of the way—we can't possibly know with any certainty what's going to happen in 2018. The elections are just too far away, too much can change, and right now we're operating with a very small sample of information.

With that said, three partisan special elections have taken place since the January 20 inauguration of President Donald Trump. These races are as hyperlocal as you can get, and they have not drawn much attention or voter interest. But these obscure elections have followed an interesting pattern with respect to Trump.

*Virginia holds legislative elections in odd years, so these numbers are for elections in 2011, 2013, and 2015.

†The regularly scheduled election in Minnesota House District 32B was canceled in 2016 and rescheduled for the special election in February 2017.

Each of the districts shifted dramatically toward Democrats when you compare the results of the 2016 presidential election in the district to its special election results this year. Iowa's House District 89 went from 52–41 Clinton to 72–27 for the Democratic State House candidate. Minnesota's House District 32B went from 60–31 Trump to a narrow 53–47 Republican win. And Virginia's House District 71 went from 85–10 Clinton to 90–0 for the Democratic House of Delegates candidate, although it should probably be ignored because Republicans did not contest the special election. (Thanks to Daily Kos Elections for doing the invaluable work of calculating presidential results by legislative district.)
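Comparing raw margins makes the size of each shift concrete. A minimal Python sketch using only the vote shares quoted above; Virginia's uncontested race is left out, as the post suggests, and the `margin` helper is my own illustration.

```python
# Each entry: ((D, R) for president in 2016, (D, R) in the 2017 special), in percent.
# Virginia HD-71 is omitted because Republicans did not contest it.
RESULTS = {
    "Iowa HD-89": ((52, 41), (72, 27)),
    "Minnesota HD-32B": ((31, 60), (47, 53)),
}


def margin(dem: int, rep: int) -> int:
    """Democratic lead in points; negative means a Republican lead."""
    return dem - rep


for district, (pres_2016, special_2017) in RESULTS.items():
    before, after = margin(*pres_2016), margin(*special_2017)
    print(f"{district}: {before:+d} -> {after:+d} "
          f"(a {after - before}-point swing toward Democrats)")
```

This reports a 34-point swing in Iowa (D+11 to D+45) and a 23-point swing in Minnesota (R+29 to R+6).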

This could be evidence for the "Trump effect"—Trump's stormy tenure and record unpopularity already poisoning Republican electoral prospects as voters react to what they're seeing in the White House. Clearly, many Trump voters in these districts either didn't show up or changed parties in these special elections. However, there is an alternative explanation.

Trump's uniqueness as a Republican candidate—alienating educated whites and minorities but winning over culturally conservative Democrats—meant that the 2016 map was a departure from the previous several elections, especially in the Midwest. The Iowa and Minnesota districts that held special elections this year are two prime examples of areas that gravitated strongly to Trump. And indeed, both districts were a lot redder in 2016 than they were in 2012, when Obama won Iowa House District 89 63% to 36% and Romney won Minnesota HD-32B by just 55% to 43%.

The 2017 special election results were a lot closer to these 2012 presidential results than the 2016 ones—and even closer to the 2012 State House results (67–32 Democratic in Iowa, 51–49 Republican in Minnesota). So an equally valid hypothesis for the meaning of 2017's results is this: maybe Trump was just a one-time deal. Maybe these districts are simply reverting to their old, usual partisanship.

We can't know for sure yet which hypothesis is correct. So far, we have only run our "experiment" (i.e., special elections) to test our hypotheses in two Trumpward-moving districts and no Clintonward-moving ones (e.g., a wealthy suburban district or a minority-heavy one). Therefore, both hypotheses would predict a lurch (or return) leftward for these districts relative to 2016 presidential results. Indeed, that was the result we saw. However, we will have to wait for a special election in a Clintonward-moving district before we can differentiate between the two possibilities. Georgia's Sixth Congressional District is an excellent example, moving from 61–37 Romney to 48–47 Trump. The special general election for this seat, vacated by new Health and Human Services Secretary Tom Price, is in June.

Of course, it is also possible—even probable—that both hypotheses are partly true. A move from 52–41 Clinton to 72–27 for the Democratic State House candidate in Iowa is probably not entirely reversion to the mean when Obama won the district "just" 63–36 and Democrats captured the seat 67–32 in 2012. But rather than assuming—or fervently hoping—that these election results represent a huge anti-Trump wave building, it's good to remember that there are other possible explanations as well.
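One rough way to weigh the two hypotheses is to measure how far each 2017 result sits from every earlier benchmark the post cites. A small Python sketch; the margins are the post's figures, and `gaps_from_baselines` is a hypothetical helper for this illustration.

```python
# Democratic margin (D minus R, in points) for every benchmark quoted in the post.
BENCHMARKS = {
    "Iowa HD-89": {
        "2012 president": 63 - 36,    # Obama 63, Romney 36
        "2012 State House": 67 - 32,
        "2016 president": 52 - 41,    # Clinton 52, Trump 41
        "2017 special": 72 - 27,
    },
    "Minnesota HD-32B": {
        "2012 president": 43 - 55,    # Obama 43, Romney 55
        "2012 State House": 49 - 51,
        "2016 president": 31 - 60,    # Clinton 31, Trump 60
        "2017 special": 47 - 53,
    },
}


def gaps_from_baselines(margins: dict[str, int]) -> dict[str, int]:
    """Distance of the 2017 special from each earlier result (positive = more Democratic)."""
    special = margins["2017 special"]
    return {name: special - m for name, m in margins.items() if name != "2017 special"}


for district, margins in BENCHMARKS.items():
    gaps = gaps_from_baselines(margins)
    closest = min(gaps, key=lambda name: abs(gaps[name]))
    print(district, gaps, "closest baseline:", closest)
```

In both districts the 2012 State House result is the closest benchmark. But the Iowa Democrat still ran 10 points ahead of even that margin, which is the part that reversion to the mean alone has trouble explaining; Minnesota's result landed 4 points to the right of its 2012 State House margin.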

Thursday, February 9, 2017

Hall of Fame Projections Are Getting Better, But They're Still Not Perfect

You'd think that, after the forecasting debacle that was the 2016 presidential election, I'd have learned my lesson and stopped trying to predict elections. Wrong. As many of you know, I put myself on the line yet again last month when I shared some fearless predictions about how the Baseball Hall of Fame election would turn out. I must have an addiction.

This year marked the fifth year in a row that I developed a model to project Hall of Fame results based on publicly released ballots compiled by Twitter users/national heroes like Ryan Thibodaux—but this was probably the most uncertain year yet. Although I ultimately predicted that four players (Jeff Bagwell, Tim Raines, Iván Rodríguez, and Trevor Hoffman) would be inducted, I knew that Rodríguez, Hoffman, and Vladimir Guerrero were all de facto coin flips. Of course, in the end, BBWAA voters elected only Bagwell, Raines, and Rodríguez, leaving Hoffman and Guerrero to hope that a small boost will push them over the top in 2018. If you had simply taken the numbers on Ryan's BBHOF Tracker at face value, you would have gotten the correct answer that only those three would surpass 75% in 2017.

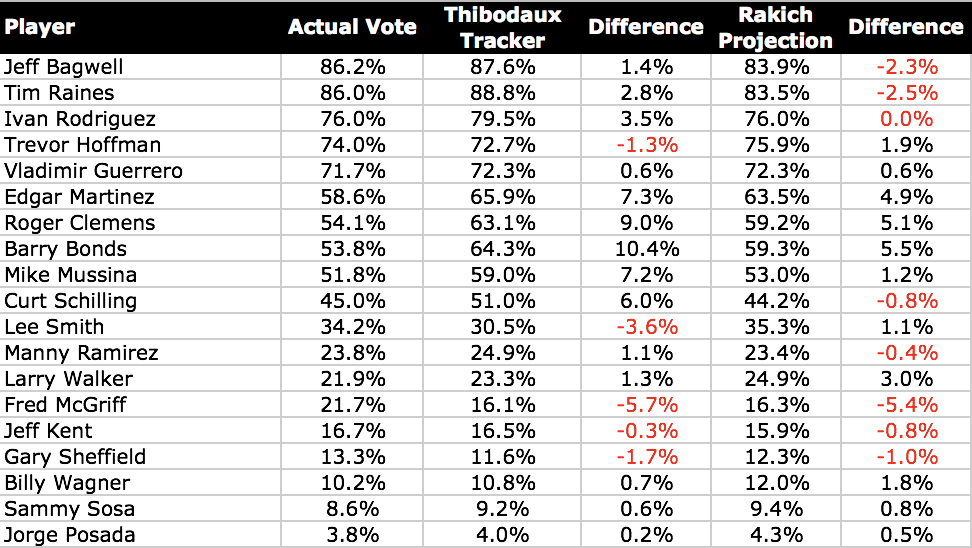

But although my projections weren't perfect, there is still a place for models in the Hall of Fame prediction business. In terms of predicting the exact percentage that each player received, the "smart" model (which is based on the known differences between public and private voters) performed significantly better than the raw data (which, Ryan would want me to point out, are not intended to be a prediction):

My model had an overall average error of 2.1 percentage points and a root mean square error of 2.7 percentage points. Most of this derives from significant misses on four players. I overestimated Edgar Martínez, Barry Bonds, and Roger Clemens all by around five points, failing to anticipate the extreme degree to which private voters would reject them. In fact, Bonds dropped by 23.8 points from public ballots to private ballots, and Clemens dropped by 20.6 points. Both figures are unprecedented: in nine years of Hall of Fame elections for which we have public-ballot data, we had never seen such a steep drop before (the previous record was Raines losing 19.5 points in 2009). Finally, I also underestimated Fred McGriff by 5.4 points. Out of nowhere, the "Crime Dog" became the new cause célèbre for old-school voters, gaining 13.0 points from public to private ballots.

Aside from these four players, however, my projections held up very well. My model's median error was just 1.2 points (its lowest ever), reflecting how it was mostly those few outliers that did me in. I am especially surprised/happy at the accuracy of my projections for the four new players on the ballot (Rodríguez, Guerrero, Manny Ramírez, and Jorge Posada). Because they have no vote history to go off, first-time candidates are always the most difficult to forecast—yet I predicted each of their final percentages within one point.
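The gap between a low median error and a higher average error and RMSE is what a handful of outliers produces. A short Python sketch of the three metrics; the error values are invented for illustration and are not my model's actual misses.

```python
from math import sqrt
from statistics import mean, median


def error_summary(errors: list[float]) -> dict[str, float]:
    """Summarize signed misses (projected minus actual, in percentage points)."""
    misses = [abs(e) for e in errors]
    return {
        "average": mean(misses),                     # mean absolute error
        "median": median(misses),                    # barely moved by a few big misses
        "rmse": sqrt(mean(e * e for e in errors)),   # squaring magnifies big misses
    }


# Invented errors: six near-misses plus four misses of about five points.
example = [0.4, -0.9, 1.1, -1.3, 0.6, 1.2, 5.0, 4.8, 5.2, -5.4]
print(error_summary(example))
```

With these made-up numbers, the median error is 1.25 points, the average error 2.59, and the RMSE about 3.3: the same ordering, for the same reason, as the real figures above.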

However, it's easy to make predictions when 56% of the vote is already known. By the time of the announcement, Ryan had already revealed the preferences of 249 of the eventual 442 voters. The true measure of a model lies in how well it predicted the 193 outstanding ones. If you predict that Ben Revere will hit 40 home runs in 2017, but you do so in July after he has already hit 20, you're obviously benefiting from a pretty crucial bit of prior knowledge. It's the same principle here.
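Backing out a player's share of the private ballots only takes the final percentage, the public percentage, and the two ballot counts. A minimal Python sketch; `private_share` is a hypothetical helper, and the 70%/80% player is invented for illustration.

```python
def private_share(final_pct: float, total_ballots: int,
                  public_pct: float, public_ballots: int) -> float:
    """Percentage of the non-public ballots implied by a final result.

    Final votes = public votes + private votes, so the private share is
    (final votes - public votes) / number of private ballots.
    """
    private_ballots = total_ballots - public_ballots
    if private_ballots <= 0:
        raise ValueError("no private ballots left to estimate")
    final_votes = final_pct * total_ballots / 100
    public_votes = public_pct * public_ballots / 100
    return 100 * (final_votes - public_votes) / private_ballots


# 2017 counts from the post: 249 of 442 ballots were public before the announcement.
print(f"{249 / 442:.0%} known, {442 - 249} outstanding")  # 56% known, 193 outstanding

# Hypothetical player: 80% on public ballots, 70% overall.
print(round(private_share(70, 442, 80, 249), 1))
```

The hypothetical player would have drawn only about 57% of private ballots, a drop of roughly 23 points. The same formula can turn a forecaster's published final projection into an implied private-ballot projection once a total ballot count is fixed, which is what assuming 435 total ballots does.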

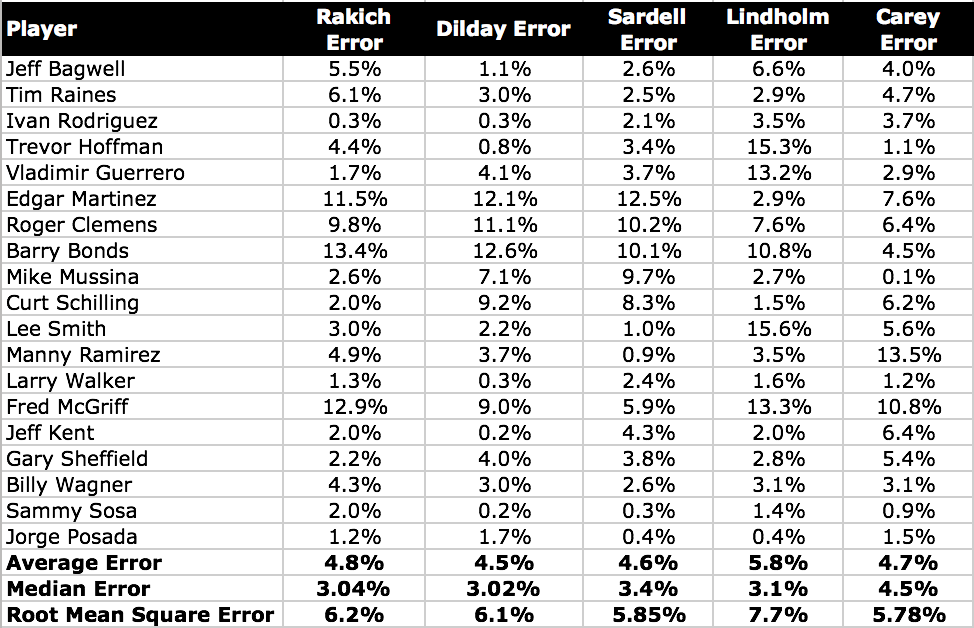

By this measure, my accuracy was obviously worse. I overestimated Bonds's performance with private ballots by 13.4 points, Martínez's by 11.5, and Clemens's by 9.8. I underestimated McGriff's standing on private ballots by 12.9 points. Everyone else was within a reasonable 6.1-point margin.

That was an OK performance, but this year I was outdone by several of my fellow Hall of Fame forecasters. Statheads Ben Dilday and Scott Lindholm have been doing the model thing alongside me for several years now, and this year Jason Sardell joined the fray with a groovy probabilistic model. In addition, Ross Carey is a longtime Hall observer and always issues his own set of qualitatively arrived-at predictions. This year, Ben came out on top with the best predictions of private ballots: the lowest average error (4.5 points), the lowest median error (3.02 points), and the third-lowest root mean square error (6.1 points; Ross had the lowest at 5.78). Ben also came the closest on the most players (six).

(A brief housekeeping note: Jason, Scott, and Ross only published final projections, not specifically their projections for private ballots, so I have assumed in my calculations that everyone shared Ryan's pre-election estimate of 435 total ballots.)

Again, my model performed best when median error is the yardstick: at 3.04 points, it had the second-lowest median error, darn close to the lowest overall. But I also had the second-highest average error (4.8 points) and root mean square error (6.2 points). Unfortunately, my few misses were big enough to outweigh any successes and hold my model back this year after a more fortuitous 2016. Next year, I'll aim to regain the top spot in this friendly competition!
