With less than a week until this year's record-early Opening Day, I'll be sharing my annual predictions for the upcoming baseball season pretty soon. But if last year's predictions are any indication, you should probably ignore them. (I'm really good at marketing, guys.) After looking back at how my political forecasts fared in 2017, I do the same for baseball below, and... it's not pretty. Come, let's all laugh at my terrible prediction skills together:

(You can read my full predictions for the 2017 American League season here; the 2017 National League season is here.)

Prediction: The AL division winners would be the Red Sox, Indians, and Astros; the Blue Jays and Rays would win the Wild Cards. In the NL, the Nationals, Cubs, and Dodgers would win their divisions, with the Mets and Cardinals nabbing Wild Cards.

What Really Happened: I got all the division winners right—although everyone else did, too (those six teams had it in the bag since virtually Opening Day)—but the Yankees, Twins, Diamondbacks, and Rockies won the Wild Cards. I had projected the Twins and D'backs for 68 and 74 wins, respectively, two of my worst predictions on the year. One of my predicted playoff teams, the Mets, instead lost 92 games. (Amazingly, though, I still nailed every other team in the NL East within two wins.) Overall, I got 13 teams within five games of their eventual win totals, and the average error of my predictions was 7.7 wins.

Prediction: Greg Bird and Aaron Judge would be the modern Mantle and Maris, going back and forth all season as the Yankees team home-run leader.

What Really Happened: This line allows me to take credit for calling Judge's breakout season, right? The towering right fielder obviously came close to winning the AL MVP with his 52 home runs. Bird, though, laid an egg: .190/.288/.422 with just nine homers.

Prediction: José Altuve would captivate America with a 50-game hitting streak.

What Really Happened: Altuve's longest hitting streak of the season was 19 games, but I doubt he was disappointed—he won the AL MVP and got to death-stare President Trump.

Prediction: Giancarlo Stanton would be the first Marlin ever to top 50 home runs, leading the National League.

What Really Happened: Stanton hit 59 homers, best in not only the Senior Circuit, but all of baseball.

Prediction: By the end of the season, Jeb Bush would be the proud new owner of the Marlins.

What Really Happened: Poor Jeb can't win anything, can he? The group headed by Bruce Sherman and Derek Jeter instead won the bidding and bought the team in August.

Prediction: Keon Broxton and Domingo Santana would strike out a combined 300 times, but both would get on base at .350 clips despite .250 batting averages. Broxton would hit 20 homers with 30 steals, and Santana would produce a mirror-image 30/20 season.

What Really Happened: I was pretty close. Broxton hit exactly 20 homers but stole "only" 21 bases. A bigger problem was his average and OBP: .220 and .299, respectively. Santana hit exactly 30 homers and stole "only" 15 bases. He hit .278 and got on base at a .371 clip.

Prediction: Bryce Harper, Adam Eaton, Trea Turner, Anthony Rendon, and Daniel Murphy would each be worth more than 4.0 WAR.

What Really Happened: Three surpassed that threshold, according to FanGraphs: Rendon (6.9), Harper (4.8), and Murphy (4.3). Turner wasn't too far behind at 3.0, but Eaton tore his ACL at the end of April, cutting off his season at 0.5 WAR.

Prediction: The Rockies rotation would be the best in club history.

What Really Happened: They posted 11.8 FanGraphs WAR (fifth in club history) and a 91 ERA− (second in club history). Jon Gray (3.2 WAR and a 73 ERA−) and Germán Márquez (2.4 and 87) led the way.

Prediction: Tigers manager Brad Ausmus would be fired in May. With the team hovering around .500 at the trade deadline, ownership would finally give the OK to blow it all up and rebuild.

What Really Happened: Ausmus held on the whole season, but he was quasi-fired in September when the Tigers announced they wouldn't renew his contract. The Tigers entered the July trade deadline at 47–56 and the August deadline at 58–74; it was at the second one when they finally fire-sold off Justin Upton and Justin Verlander.

Prediction: The San Francisco offense would post its worst offensive season since the 2011 squad's 91 OPS+.

What Really Happened: The 2017 Giants managed just an 83 OPS+, the worst in baseball and the Giants' worst score since 2009.

Prediction: Pablo Sandoval would scream back to relevance with 25 home runs and a positive number of Defensive Runs Saved.

What Really Happened: This is one of those predictions that makes you realize just how long ago March 2017 was. Sandoval hit .212/.269/.354 with the Red Sox, was released in July, returned to the Giants, and basically put up the same slash line for them. He hit nine home runs total with −7 DRS.

Prediction: Every Orioles starting pitcher except Wade Miley would give up more runs in 2017 than in 2016.

What Really Happened: They all did it—including Miley.

Prediction: Robbie Ray would take a huge step forward, shaving more than a run off his ERA and leading the league in strikeouts.

What Really Happened: Ray went from a 4.90 ERA to 2.89. Ray's 218 strikeouts were "only" good for third in the Senior Circuit (Max Scherzer led with 268), but Ray did lead in strikeouts per nine innings (12.11).

Prediction: Every Dodgers starting pitcher would miss at least eight starts as the injury bug plagued Los Angeles.

What Really Happened: Every Dodgers starting pitcher missed at least five starts. Their pitchers lost 1,051 days to the disabled list in total, and the entire roster led MLB with 1,914 days missed.

Prediction: Jean Segura would be a huge bust. Mitch Haniger would turn out to be the more valuable addition from the Taijuan Walker trade, even in the short term.

What Really Happened: Segura went from 5.0 WAR to 2.9 WAR—hardly a bust, and still better than Haniger. However, I was at least right that Haniger would distinguish himself right away: he accumulated 2.5 WAR and wOBA-ed .360.

Prediction: The Mets rotation would be fully healthy and dominant, getting 200 innings out of Noah Syndergaard, a sub-3.00 ERA from Steven Matz, and even a respectable year out of Matt Harvey.

What Really Happened: A wonky elbow held Matz to 13 starts with a 6.08 ERA. A torn lat muscle kept Syndergaard out for five months. And Harvey was lucky not to be non-tendered after his 6.70 ERA performance.

Prediction: Jordan Zimmermann would rue signing with Detroit as he became a pure contact pitcher (setting a career low in strikeout percentage) but the Tigers' league-worst defense failed to convert those balls in play into outs.

What Really Happened: Exactly that. Zimmermann's 14.5% strikeout percentage was not only a career low, but it was also fifth-worst among all MLB pitchers with at least 160 innings pitched. As a result of the Tigers' AL-worst −69 DRS, Zimmermann mustered just a 6.08 ERA.

Prediction: Two former Rangers prospects would experience resurgences. Jurickson Profar would win the batting title, and Delino DeShields Jr. would sport a .350 OBP and 30 stolen bases.

What Really Happened: Whoops—Profar hit .172 in only 58 at-bats. But DeShields came eerily close to my projections: his OBP was .347, and he stole 29 bases.

Prediction: Greg Holland wouldn't notch a save all season.

What Really Happened: He led the NL in them with 41.

Prediction: José Berríos and Byron Buxton would finally live up to their potential—which would be good for the Twins, since Brian Dozier would hit just .210 with 10 home runs and nearly 200 strikeouts.

What Really Happened: Berríos went from walking nearly as many as he struck out in 2016 to 14–8 with a 3.89 ERA. Buxton hit a decent .728 OPS but, thanks to stellar defense, amassed 3.5 WAR. Dozier, though, was his usual excellent self, slashing .271/.359/.498 with 141 strikeouts and only mild regression in the homer department (34).

Prediction: Wade Davis would struggle with his control in his recovery from injury, and Kyle Hendricks would regress to league average.

What Really Happened: Davis did indeed walk a career-high 11.6% of batters he faced; I'm nervous for him in 2018. Hendricks regressed from a 2.13 ERA to 3.03, but that was still good for an ERA+ of 144.

Prediction: I called Dansby Swanson winning NL Rookie of the Year "the safest prediction on this page." However, I did expect Cody Bellinger to "force himself into the lineup in mid-summer."

What Really Happened: Swanson didn't even get Rookie of the Year votes, as he finished with just 0.1 WAR. Bellinger came up on April 25 and didn't look back, collecting 39 home runs and the ROY trophy.

Prediction: The Rangers would have a losing record in one-run games, and they would lead the AL in days spent on the DL.

What Really Happened: Texas did indeed go 13–24 in one-run games, the worst mark in baseball. Their players spent an above-average 1,271 days on the DL, but the Rays led the AL with 1,644.

Prediction: Jason Heyward would bounce back with a .290/.350/.450 slash line, 20 DRS, and a 5.0 WAR. Ben Zobrist, on the other hand, would run into a brick wall. His modest value with the bat would be offset by the worst defensive season of his career.

What Really Happened: Heyward was only marginally better than his disappointing 2016 with the bat (.259/.326/.389), and while he did put up 18 DRS, UZR was much less kind to him. As a result, he had a FanGraphs WAR of just 0.9. Zobrist joined him in the Cubs' trash heap, though not for the reasons I foresaw. He was putrid at the plate (.232/.318/.375) but maintained a (barely) positive defensive value (1 DRS, 1.7 Fielding Runs Above Average).

Prediction: Jorge Soler would lead Royals position players in WAR.

What Really Happened: At −1.0, he was instead dead last.

Prediction: Sonny Gray would continue to be a 75 ERA+ pitcher, Jharel Cotton would pitch 160 innings with a 3.40 ERA, and All-Star Sean Manaea would be the first of the 2017 season to throw a no-hitter.

What Really Happened: Gray returned to form with a 123 ERA+, good enough to be traded to the Yankees. Cotton stunk up the joint to the tune of a 5.58 ERA in 129 innings. Manaea was better (a 4.37 ERA) but no All-Star—although he did throw five no-hit innings in just his third start of the season. (He was removed with the no-hitter intact because he had already thrown 98 pitches.)

Prediction: Jay Bruce would finally win over Mets fans by giving them a .750 OPS, while José Reyes would be banished from Flushing for good by the end of the season.

What Really Happened: Bruce gave Mets fans a .841 OPS, earning a trade to Cleveland, but New York welcomed him back as a free agent in January. Despite being far worse (a .315 OBP), Reyes was allowed to bat 501 times for the Mets, and he too was re-signed in January.

Prediction: The Dodgers would have the NL's stingiest bullpen, followed by the Marlins.

What Really Happened: The Dodgers did rank first in the NL in bullpen ERA (3.38), but the Marlins ranked 10th (4.40).

Prediction: Félix Hernández would post a career-low strikeout rate and flirt with his career-high ERA of 4.52. However, Drew Smyly would make up for it with a 3.20 ERA. James Paxton would finally pitch to his 2.80 FIP.

What Really Happened: Hernández's 21.2% strikeout rate wasn't the lowest of his career, but his ERA did soar to 4.36. Smyly didn't pitch an inning all season, going under the knife in June. Paxton did indeed have that breakout season, posting a 2.98 ERA, but it still didn't catch up to his FIP, which was an outstanding 2.61.

Prediction: David Dahl would surpass outfield-mates Carlos González and Charlie Blackmon in WAR.

What Really Happened: Dahl never played in the majors all year long, so obviously he failed to do so. It would've been easy to beat out González (−0.2 WAR), but Blackmon got MVP votes with his 6.5 score.

Prediction: Steven Souza would finally have that 20/20 breakout season, and Colby Rasmus and Matt Duffy would match their career-high WARs for the Rays.

What Really Happened: Rasmus "stepped away from baseball" halfway through the year, and Duffy never even played in the majors. Souza did break out, but not in quite so balanced a proportion: he hit 30 home runs and stole 16 bases. Both were career highs, and he was traded to the Diamondbacks for his efforts.

Prediction: Jonathan Villar would hit just .240, and his runs scored and stolen bases would both be slashed in half from 2016.

What Really Happened: Villar hit .241. His runs scored went from 92 to 49, and his stolen bases went from 62 to 23.

Prediction: Chris Archer and Blake Snell would be a formidable two-headed monster at the front of the Rays rotation, but the AL Cy Young Award would go to KC's Danny Duffy (a 2.50 ERA and 240 strikeouts).

What Really Happened: Archer actually regressed from 2016 with a 4.07 ERA, and Snell was close behind at 4.04. Duffy was a little better, boasting a 3.81 ERA, but only punched out 130. None of the three received any Cy Young votes.

Prediction: Jameson Taillon's 2.50 ERA would put him squarely in the NL Cy Young conversation. However, Pirates teammate Jung Ho Kang's personal and legal problems would end his major-league career.

What Really Happened: Taillon instead took a huge step back with a 4.44 ERA, although a 3.48 FIP and .352 BABIP suggest he didn't deserve that fate. And so far so bad for Kang.

Prediction: José Bautista would bounce back so convincingly that he would be as valuable as his 2016 self and Edwin Encarnación combined.

What Really Happened: Bautista was awful. His −0.5 WAR was far worse than the 1.4 he accrued in 2016. Encarnación was worth 2.5 WAR in his first season in Cleveland.

Friday, March 23, 2018

What I Didn't Expect in Baseball in 2017

Tuesday, March 13, 2018

What I Didn't Expect in Politics in 2017

It's snowing out and nothing else is really going on, so I'm taking care of some site housekeeping today. Every year, I make a certain number of predictions on these webpages, and every year I try to look back at how I did. This is the first of two posts on that subject—the one that will focus on politics.

Every fall, I issue race ratings, inspired by those at Inside Elections, for every downballot constitutional office up for election. For those types of elections (not so much for politics in general), 2017 was a pretty quiet year: only the Virginia lieutenant governor, Virginia attorney general, and Louisiana treasurer were on the ballot. Here were my ratings for those three races, originally issued in October and kept current (although they never changed) through November 6. They predicted a status quo election, with Democrats holding onto the two offices they already owned, and Republicans successfully defending their one seat.

The small number of races meant I had fewer opportunities to make a bone-headed mistake, and as a result the ratings validated quite nicely.

- Democrats won two out of two races I rated as Lean Democratic.

- Republicans won the one contest I rated as Solid Republican.

In a well-calibrated world, the Virginia average of D+6.2 is probably right on the border between Lean Democratic and Likely Democratic. Likewise, the Louisiana treasurer margin of R+11.5 is on the Solid side of Likely Republican. All in all, pretty close, though.

As I mentioned, getting these races right is no great achievement: last year offered a small number of fairly predictable races. The big challenge will be 2018; midterm cycles are the absolute busiest for downballot constitutional offices. My goal this year is merely to handicap all 142 of them before November, never mind get them all right. Wish me luck!

Labels: Accountability, Constitutional Offices, Politics, Predictions, Ratings

Friday, March 2, 2018

Race Ratings for the 90th Academy Awards

The Oscars are the one election that numbers still can't predict. The black box that is the Academy of Motion Picture Arts and Sciences doesn't lend itself to model-building; instead, we're forced to rely on the sleuthing and scuttlebutt of reporters with their fingers on the pulse of the electorate. I'm sorry to say that the fate of your Oscar pool rests entirely on educated guesses.

In politics, there's a place for those too. My colleagues at Inside Elections and other electoral handicappers issue qualitative predictions on a scale from "Solid Democratic" to "Solid Republican," and it's proven a useful way for politicos to easily think about and group together races of varying competitiveness. In that spirit, for four years running now, I've issued the same type of race ratings for the 24 categories at the Academy Awards. Meant to give the fair-weather Oscars fan a quick idea of the state of play, they're the best way to think about who will win the big prizes on Sunday night.

Below are my ratings for the 90th Academy Awards, based on a consensus of betting markets, expert opinions, and award history. For my own personal predictions (which occasionally veer away from conventional wisdom to try to predict the inevitable upset), click here.

Best Picture: Tilt The Shape of Water

The Oscars' use of instant-runoff voting for the top prize has kept the Best Picture winner consistently suspenseful for the last several years—including when it wasn't supposed to be suspenseful at all. This year is no exception, as The Shape of Water and Three Billboards Outside Ebbing, Missouri have both won crucial precursor prizes (the Directors Guild of America Award and the Screen Actors Guild Award, respectively). Both would break Oscar precedent, as The Shape of Water would be only the second film in 25 years to win Best Picture without at least a nomination for SAG's top prize, and Three Billboards would be only the second film in 25 years to win without a Best Director nomination. I give the edge to Shape, since it has more Oscar nominations overall (13), indicating support among all of the Academy's branches, and also snagged the highly predictive Producers Guild of America Award.

Best Director: Solid Guillermo del Toro

The Shape of Water helmer won the all-important DGA Award, while Three Billboards's Martin McDonagh isn't even nominated. Once Del Toro wins, all three of the "Three Amigos"—the Mexican auteurs Alfonso Cuarón, Alejandro González Iñárritu, and Del Toro—will have won the Best Director Oscar this decade.

Best Actor: Solid Gary Oldman

Oldman has been rewarded for his physical transformation into Winston Churchill in Darkest Hour by nearly every awards guild thus far.

Best Actress: Solid Frances McDormand

McDormand's portrayal of a justice-seeking mother in Three Billboards is Oldman's awards-circuit dominance without a Y chromosome. She's a lock as well.

Best Supporting Actor: Solid Sam Rockwell

McDormand's Three Billboards co-star seems like he will easily overcome whisper campaigns about his character's problematic arc to win Oscar gold.

Best Supporting Actress: Solid Allison Janney

There was a time when Janney and Lady Bird's Laurie Metcalf were running neck and neck here, so some might still see this as a competitive category, but since the precursor awards started getting handed out, there's really been no indication that Janney could lose. The only question now is if the I, Tonya star will rap "The Jackal" as part of her acceptance speech.

Best Adapted Screenplay: Solid Call Me by Your Name

This is an historically weak category: three of the nominees weren't good enough to score a nomination anywhere else, and the writing branch had to dig so deep that it nominated a superhero movie (Logan) for the first time in history. The contest here is between Call Me by Your Name and Mudbound; the Best Picture nominee has the clear advantage.

Best Original Screenplay: Tossup

On the other hand, because almost every prestige pic this year was not adapted, the Best Original Screenplay category is packed to the gills with talent. Get Out, the wildly original thriller with something to say about racism, and the snappy dialogue of Three Billboards Outside Ebbing, Missouri are locked in such a tight battle that it's impossible to say who's ahead. Lady Bird could be a dark horse, too.

Best Animated Feature: Solid Coco

Best Documentary Feature: Lean Faces Places

Best Foreign Language Film: Lean A Fantastic Woman

Best Cinematography: Likely Blade Runner 2049

The brilliant Roger Deakins finally looks lined up for his first Oscar after 14 (!) nominations, but beware: the Academy may be consciously averse to his work. He's been a frontrunner in this category at least twice before—only to be upset.

Best Costume Design: Likely Phantom Thread

Best Film Editing: Tilt Dunkirk

Best Makeup and Hairstyling: Solid Darkest Hour

Best Production Design: Likely The Shape of Water

Best Original Score: Likely The Shape of Water

Best Original Song: Tilt "Remember Me"

Best Sound Editing: Likely Dunkirk

Best Sound Mixing: Likely Dunkirk

Best Visual Effects: Tossup

Best Animated Short: Likely Dear Basketball

Best Documentary Short: Lean Edith+Eddie

Best Live-Action Short: Likely DeKalb Elementary

Wednesday, February 21, 2018

My Model Nailed This Year's Hall of Famers—The Vote Totals, Not So Much

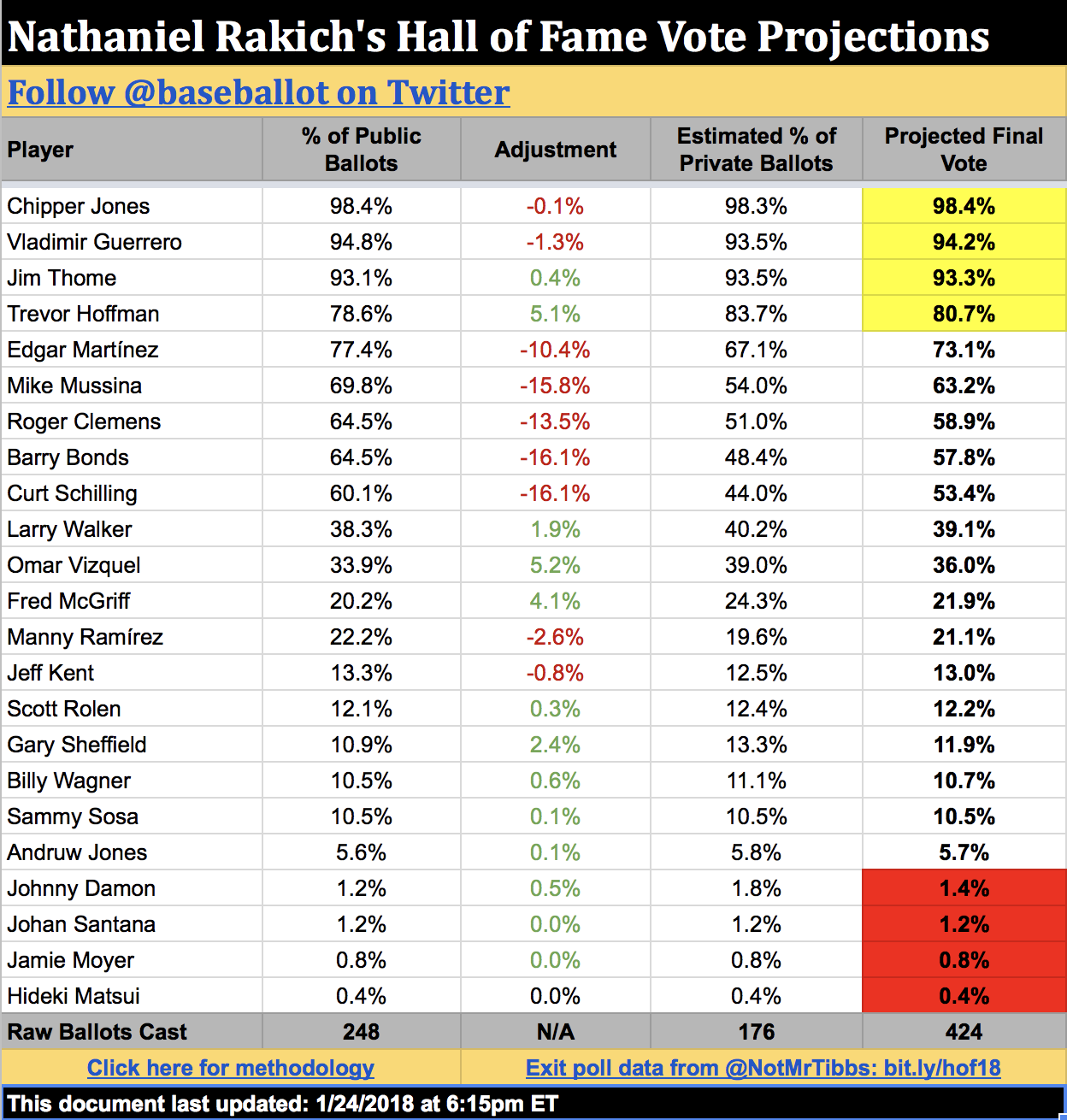

About one month ago, Chipper Jones, Vladimir Guerrero, Jim Thome, and Trevor Hoffman were elected to the Baseball Hall of Fame, which means two things: (1) this Hall of Fame election post mortem is almost one month overdue, and (2) for the first time in three years, my forecasting model correctly predicted the entire Hall of Fame class.

You'd think that would be cause for satisfaction (and I suppose it is better than nothing), but instead I'm pretty disappointed with its performance. The reason is that an election model doesn't really try to peg winners per se; rather, it tries to predict final vote totals—in other words, numbers. And quantitatively, my model had an off year, especially compared to some of my peers in the Hall of Fame forecasting world.

First, a brief rundown of my methodology. My Hall of Fame projections are based on the public ballots dutifully collected and shared with the world by Ryan Thibodaux (and, this year, his team of interns); I extend my gratitude to them again for sacrificing so much of their time doing so. Based on the percentage of public ballots each player is on to date, I calculate his estimated percentage of private (i.e., as yet unshared) votes based on how much those two numbers have differed in past Hall of Fame elections. These "Adjustment Factors"—positive for old-school candidates like Omar Vizquel, negative for steroid users or sabermetric darlings like Roger Clemens—are the demographic weighting to Ryan's raw polling data. And indeed, they produce more accurate results than just taking the Thibodaux Tracker as gospel:

My model's average error was 1.6 percentage points; the raw data was off by an average of three points per player. I didn't have as many big misses this year as last year; my worst performance was on Larry Walker, whom I overestimated by 5.0 points. My model assumed the erstwhile Rockie would gain votes in private balloting, as he had done every year from 2011 to 2016, but 2017 turned out to be the beginning of a trend; Walker did 10.5 points worse on 2018 private ballots than on public ones. I also missed Thome's final vote total by 3.5 points, although I feel better about that one, since first-year candidates are always tricky to predict. Most of my other predictions were pretty close to the mark, including eight players I predicted within a single percentage point. I came within two points of the correct answer for 17 of the 23 players forecasted, giving me a solid median error of 1.3 points. For stat nerds, I also had a root mean square error (RMSE) of 1.9 points.
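For anyone who wants to check the three error measures quoted above, here is a minimal sketch of how they might be computed. The function name `error_summary` and the three-player sample are made up for illustration; they are not my actual projections or the real results.

```python
import math
import statistics


def error_summary(projected, actual):
    """Return mean absolute error, median absolute error, and RMSE.

    Both arguments map player name -> vote share in percentage points.
    """
    if not projected or projected.keys() != actual.keys():
        raise ValueError("projected and actual must cover the same players")
    errors = [projected[name] - actual[name] for name in projected]
    abs_errors = [abs(e) for e in errors]
    squared_errors = [e * e for e in errors]
    return {
        "mean": statistics.mean(abs_errors),
        "median": statistics.median(abs_errors),
        "rmse": math.sqrt(statistics.mean(squared_errors)),
    }


# Hypothetical sample, NOT real Hall of Fame data.
projected = {"Player A": 80.0, "Player B": 55.0, "Player C": 30.0}
actual = {"Player A": 79.0, "Player B": 58.0, "Player C": 30.0}

summary = error_summary(projected, actual)
for label, value in summary.items():
    print(f"{label}: {value:.2f} points")
```

Because RMSE squares each miss before averaging, it can never be smaller than the mean absolute error, and one big whiff pulls it up faster than it pulls up the mean or the median.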

All three error values (mean, median, and RMS) were the second-best of my now-six-year Hall of Fame forecasting career. But that's misleading: during the past two years, thanks to Ryan's tireless efforts, more votes have been made public in advance of the announcement than ever before. Of course my predictions are better now—there's less I don't know.

Really what we should be measuring is my accuracy at predicting only the 175 ballots that were still private when I issued my final projections just minutes before Jeff Idelson opened the envelope to announce the election winners. Here are the differences between my estimates for those ballots and what they actually ended up saying.

The biggest misses are still with the same players, but the true degree of my error is now made plain. I overshot Walker's private ballots by more than 12 percentage points, and Thome's by more than eight. Those aren't good performances no matter how you slice them. If we're focusing on the positives, I was within four percentage points on 16 of 23 players. My average error was 3.8 points, much better than last year when I had several double-digit misses, but my median error was 3.2 points, not as good as last year.
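The private-ballot numbers aren't published directly; they have to be backed out of the final results and the public tally. A rough sketch of that arithmetic follows. The function name and the ballot counts in the example are hypothetical, chosen only to keep the math round.

```python
def implied_private_pct(final_pct, total_ballots, public_pct, public_ballots):
    """Back out a player's share of the private ballots.

    final_pct / public_pct are percentages of all ballots and of public
    ballots, respectively; the private share is whatever is left over.
    """
    private_ballots = total_ballots - public_ballots
    if private_ballots <= 0:
        raise ValueError("there must be at least one private ballot")
    total_votes = final_pct / 100 * total_ballots
    public_votes = public_pct / 100 * public_ballots
    return 100 * (total_votes - public_votes) / private_ballots


# Hypothetical example: 400 ballots cast, 300 of them public.
# A player on 40% of public ballots who finishes at 35% overall
# must have appeared on only a small share of the 100 private ballots.
private_pct = implied_private_pct(
    final_pct=35.0, total_ballots=400, public_pct=40.0, public_ballots=300
)
print(f"implied private share: {private_pct:.1f}%")
```

The same leftover logic explains why a miss that looks small in the overall total can be large on the private ballots: the error gets concentrated into a much smaller pool of votes.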

But where I really fell short was in comparison to other Hall of Fame forecasters: Chris Bodig, who published his first-ever projections this year on his website, Cooperstown Cred; Ross Carey, who hosts the Replacement Level Podcast and is the only one with mostly qualitative predictions; Scott Lindholm, who has been issuing his projections alongside me since day one; and Jason Sardell, who first issued his probabilistic forecast last year. Of them all, it was the rookie who performed the best: Bodig's private-ballot projections had a mean and median error of only 2.2 percentage points. His RMSE also ranked first (2.7 points), followed by Sardell (3.1), Carey (3.9), me (4.6), and Lindholm (6.3). Bodig also came the closest on the most players (10).

Overall, my model performed slightly better this year than it did last year, but that's cold comfort: everyone else improved over last year as well (anecdotally, this year's election felt more predictable than last), so I repeated my standing toward the bottom of the pack. Put simply, that's not good enough. After two years of subpar performances, any good scientist would reevaluate his or her methods, so that's what I'm going to do. Next winter, I'll explore some possible changes to the model in order to make it more accurate. Hopefully, it just needs a small tweak, like calculating Adjustment Factors based on the last two elections rather than the last three (or weighting more recent elections more heavily, a suggestion I've received on Twitter). However, I'm willing to entertain bigger changes too, such as calculating more candidates' vote totals the way I do for first-time candidates, or going more granular to look at exactly which voters are still private and extrapolating from their past votes. Anything in the service of more accuracy!

You'd think that would be cause for satisfaction (and I suppose it is better than nothing), but instead I'm pretty disappointed with its performance. The reason is that an election model doesn't really try to peg winners per se; rather, it tries to predict final vote totals—in other words, numbers. And quantitatively, my model had an off year, especially compared to some of my peers in the Hall of Fame forecasting world.

First, a brief rundown of my methodology. My Hall of Fame projections are based on the public ballots dutifully collected and shared with the world by Ryan Thibodaux (and, this year, his team of interns); I extend my gratitude to them again for sacrificing so much of their time doing so. Starting from the percentage of public ballots each player appears on to date, I estimate his percentage of private (i.e., as yet unshared) votes according to how much those two numbers have differed in past Hall of Fame elections. These "Adjustment Factors" (positive for old-school candidates like Omar Vizquel, negative for steroid users or sabermetric darlings like Roger Clemens) are the demographic weighting to Ryan's raw polling data. And indeed, they produce more accurate results than just taking the Thibodaux Tracker as gospel:

My model's average error was 1.6 percentage points; the raw data was off by an average of three points per player. I didn't have as many big misses this year as last year; my worst performance was on Larry Walker, whom I overestimated by 5.0 points. My model assumed the erstwhile Rockie would gain votes in private balloting, as he had done every year from 2011 to 2016, but 2017 turned out to be the beginning of a trend; Walker did 10.5 points worse on 2018 private ballots than on public ones. I also missed Thome's final vote total by 3.5 points, although I feel better about that one, since first-year candidates are always tricky to predict. Most of my other predictions were pretty close to the mark, including eight players I predicted within a single percentage point. I came within two points of the correct answer for 17 of the 23 players forecasted, giving me a solid median error of 1.3 points. For stat nerds, I also had a root mean square error (RMSE) of 1.9 points.
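For readers who want to check figures like these themselves, here is a minimal Python sketch of the three error measures mentioned above (mean, median, and root mean square). The function name and the four sample projections are hypothetical placeholders, not the real 2018 numbers.

```python
import math
import statistics


def error_summary(projected, actual):
    """Return (mean, median, RMSE) of the absolute errors, in percentage points."""
    errors = [abs(p - a) for p, a in zip(projected, actual)]
    mean_error = sum(errors) / len(errors)
    median_error = statistics.median(errors)
    # RMSE squares each miss before averaging, so big misses count for more.
    rmse = math.sqrt(sum(e ** 2 for e in errors) / len(errors))
    return mean_error, median_error, rmse


# Hypothetical projections and results, NOT the actual 2018 figures.
projected = [75.0, 60.0, 40.0, 10.0]
actual = [74.0, 63.0, 40.0, 12.0]

mean_error, median_error, rmse = error_summary(projected, actual)
print(mean_error, median_error, round(rmse, 2))
```

Because RMSE punishes large misses more than small ones, it will always be at least as big as the mean error, which is why one bad Walker-style miss moves it more than it moves the median.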

All three error values (mean, median, and RMS) were the second-best of my now-six-year Hall of Fame forecasting career. But that's misleading: during the past two years, thanks to Ryan's tireless efforts, more votes have been made public in advance of the announcement than ever before. Of course my predictions are better now—there's less I don't know.

Really what we should be measuring is my accuracy at predicting only the 175 ballots that were still private when I issued my final projections just minutes before Jeff Idelson opened the envelope to announce the election winners. Here are the differences between my estimates for those ballots and what they actually ended up saying.

The biggest misses are still with the same players, but the true degree of my error is now made plain. I overshot Walker's private ballots by more than 12 percentage points, and Thome's by more than eight. Those aren't good performances no matter how you slice them. If we're focusing on the positives, I was within four percentage points on 16 of 23 players. My average error was 3.8 points, much better than last year when I had several double-digit misses, but my median error was 3.2 points, not as good as last year.
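Isolating the private ballots is just subtraction: take the final totals and remove what was already public. The Python sketch below shows one way to do that under stated assumptions. The function name is hypothetical, and every input is made up for illustration, except that the example keeps 175 ballots private to match the count quoted above.

```python
def private_ballot_pct(total_votes, total_ballots, public_votes, public_ballots):
    """Back out a player's percentage on the ballots that were never made public."""
    private_votes = total_votes - public_votes
    private_ballots = total_ballots - public_ballots
    return 100 * private_votes / private_ballots


# Hypothetical player: 400 total ballots, 225 of them public, 175 private.
actual_private = private_ballot_pct(
    total_votes=140, total_ballots=400, public_votes=90, public_ballots=225
)

# Hypothetical pre-announcement estimate of the same player's private share.
projected_private = 40.0
miss = projected_private - actual_private  # positive means an overestimate
print(round(actual_private, 2), round(miss, 2))
```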

But where I really fell short was in comparison to other Hall of Fame forecasters: Chris Bodig, who published his first-ever projections this year on his website, Cooperstown Cred; Ross Carey, who hosts the Replacement Level Podcast and is the only one with mostly qualitative predictions; Scott Lindholm, who has been issuing his projections alongside me since day one; and Jason Sardell, who first issued his probabilistic forecast last year. Of them all, it was the rookie who performed the best: Bodig's private-ballot projections had a mean and median error of only 2.2 percentage points. His RMSE also ranked first (2.7 points), followed by Sardell (3.1), Carey (3.9), me (4.6), and Lindholm (6.3). Bodig also came the closest on the most players (10).

Overall, my model performed slightly better this year than it did last year, but that's cold comfort: everyone else improved over last year as well (anecdotally, this year's election felt more predictable than last), so I repeated my standing toward the bottom of the pack. Put simply, that's not good enough. After two years of subpar performances, any good scientist would reevaluate his or her methods, so that's what I'm going to do. Next winter, I'll explore some possible changes to the model in order to make it more accurate. Hopefully, it just needs a small tweak, like calculating Adjustment Factors based on the last two elections rather than the last three (or weighting more recent elections more heavily, a suggestion I've received on Twitter). However, I'm willing to entertain bigger changes too, such as calculating more candidates' vote totals the way I do for first-time candidates, or going more granular to look at exactly which voters are still private and extrapolating from their past votes. Anything in the service of more accuracy!

Labels: Accountability, Baseball, Hall of Fame, Number-Crunching, Predictions

Tuesday, January 23, 2018

Edgar Martínez Is a Coin Flip Away from the Hall of Fame

In early December, I thought we were finally going to get a break. After four consecutive Hall of Fame elections where the outcome was in real doubt, this year looked like a gimme: Chipper Jones, Jim Thome, Vladimir Guerrero, and Trevor Hoffman were going to make the Hall of Fame comfortably; no one else would sniff 75%.

Then Edgar Martínez started polling at 80%. And stayed there. And stayed there. And stayed there.

Thanks to Edgar's steady strength in Ryan Thibodaux's BBHOF Tracker, which aggregates all Hall of Fame ballots made public so far this year, my projection model of the Baseball Hall of Fame election has alternated between forecasting the Mariner great's narrow election and predicting he would barely fall short. Despite the roller coaster of emotion these fluctuations have caused on Twitter, the reality is that my model paints a consistent picture: Martínez's odds are basically 50-50.

My model, which is in its sixth year of predicting the Hall of Fame election, operates on the premise that publicly released ballots differ materially—and consistently—from ballots whose casters choose to keep them private. BBWAA members who share their ballots on Twitter tend to be more willing to vote for PED users, assess candidates using advanced metrics, and use up all 10 spots on their ballot. Private voters—often more grizzled writers who in many cases have stopped covering baseball altogether—prefer "gritty" candidates whose cases rely on traditional metrics like hits, wins, or Gold Glove Awards. As a result, candidates like Barry Bonds, Roger Clemens (PEDs), Mike Mussina (requires advanced stats to appreciate), and Martínez (spent most of his career at DH, a position many baseball purists still pooh-pooh) do substantially worse on private ballots than on public ballots. Candidates like Hoffman (so many saves) and Omar Vizquel (so many Gold Gloves) can be expected to do better on ballots we haven't seen than on the ones we have.

That means the numbers in Thibodaux's Tracker—a.k.a. the public ballots—should be taken seriously but not literally. What my model does is quantify the amount by which each player's vote total in the Tracker should be adjusted. Specifically, I look at the percentage-point difference between each player's performance on public vs. private ballots in the last three Hall of Fame elections (2017, 2016, and 2015). The average of these three numbers (or just two if the player has been on the ballot only since 2016, or just one if he debuted on the ballot last year) is what I call the player's Adjustment Factor. My model simply assumes that the player's public-to-private shift this year will match that average.

Let's take Edgar as an example. In 2017, his private-ballot performance was 16.6 percentage points lower than his public-ballot performance. In 2016, it was 7.7 percentage points lower, and in 2015 it was 6.8 percentage points lower. That averages out to an Adjustment Factor of −10.37 percentage points. As of Monday night, Martínez was polling at 79.23% in the Tracker, so his estimated performance on private ballots is 68.86%.

The final step in my model is to combine the public-ballot performance with the estimated private-ballot performance in the appropriate proportions. In the same example, as of Monday night, 207 of an expected 424 ballots had been made public, or 48.82%. If 48.82% of ballots vote for Martínez at a rate of 79.23%, and the remaining 51.18% vote for Martínez at a rate of 68.86%, that computes to an overall performance of 73.92%—just over one point shy of induction.
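The Edgar arithmetic from the last two paragraphs can be reproduced in a few lines of Python. This is only a sketch of the calculation as described in the text; the function names are made up for illustration, and the inputs are the Monday-night figures quoted above.

```python
def adjustment_factor(past_differentials):
    """Straight average of past private-minus-public gaps, in percentage points."""
    return sum(past_differentials) / len(past_differentials)


def project_total(public_pct, factor, public_ballots, expected_ballots):
    """Blend the public result with the estimated private result."""
    public_share = public_ballots / expected_ballots
    private_pct = public_pct + factor  # estimated performance on private ballots
    return public_share * public_pct + (1 - public_share) * private_pct


# Edgar Martinez's differentials for 2017, 2016, and 2015, as quoted above.
edgar_factor = adjustment_factor([-16.6, -7.7, -6.8])

# 79.23% on 207 public ballots, out of an expected 424 total.
edgar_total = project_total(79.23, edgar_factor, 207, 424)

print(round(edgar_factor, 2), round(edgar_total, 2))  # -10.37 73.92
```

Note that the blend is equivalent to subtracting the Adjustment Factor only from the private slice of the electorate, so the more ballots go public, the less the factor matters.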

But my model is far from infallible. Last year, my private-ballot projections were off by an average of 4.8 percentage points, a decidedly meh performance in the small community of Hall of Fame projection models. (But don't stop reading: historically, my projections have fared much better.) Small, subjective methodological decisions can be enough to affect outcomes in what is truly a mathematical game of inches. For example, why take a straight average of Edgar's last three public-private differentials when they have been growing more and more gaping over time? (Answer: in past years, with other candidates, a straight average has proven more accurate than one that weights recent years more heavily. Historically speaking, Edgar is just as likely to revert to his "usual" modest Adjustment Factor as he is to continue trending in a bad direction.) If there's one thing that studying Hall of Fame elections has taught me, it's that voters will zig when you expect them to zag.
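To see how much that one methodological choice can matter, here is a short Python sketch comparing a straight average with a recency-weighted one for Edgar's three differentials. The 3-2-1 weights are purely hypothetical, chosen for illustration; the text does not specify any particular weighting scheme.

```python
def weighted_adjustment_factor(differentials, weights):
    """Weighted mean of past differentials; both lists run most recent first."""
    return sum(d * w for d, w in zip(differentials, weights)) / sum(weights)


edgar = [-16.6, -7.7, -6.8]  # 2017, 2016, 2015

# Equal weights reproduce the straight average used in the model.
straight = weighted_adjustment_factor(edgar, [1, 1, 1])

# Hypothetical 3-2-1 weights that lean on the most recent election.
recency = weighted_adjustment_factor(edgar, [3, 2, 1])

print(round(straight, 2), round(recency, 2))  # -10.37 -12.0
```

Under those made-up weights, the factor grows by about 1.6 points, which would drag the blended projection from roughly 73.9% down to roughly 73.1%. That is a small shift, but in a race this close to 75% it is exactly the kind of inch the game is played in.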

One of my fellow Hall of Fame forecasters, Jason Sardell, wisely communicates the uncertainty inherent in our vocation by providing not only projected vote totals, but also the probability that each candidate will be elected. His model, which uses a totally different methodology based on voter adds and drops, gives Edgar just a 12% chance of induction as of Monday night. I'm not smart enough to assign probabilities to my own model, but as discussed above, it's pretty clear from the way Edgar has seesawed around the required 75% that his shot is no better than a coin flip. Therefore, when the election results are announced this Wednesday at 6pm ET, wherever Martínez lands in my final projection, no outcome should be a surprise.

Below are my current Hall of Fame projections for every candidate on the ballot. They will be updated in real time leading up to the announcement. (UPDATE, January 24: The below are my final projections issued just before the announcement.)

(Still with me? Huzzah. There's one loose methodological end I'd like to tie up for those of you who are interested: how I calculate the vote shares of first-time candidates. This year, that's Chipper Jones, Thome, Vizquel, Scott Rolen, Andruw Jones, Johnny Damon, and Johan Santana.

Without previous vote history to go off, my model does the next best thing for these players: it looks at which other candidate on the ballot correlates most strongly with—or against—them. If New Candidate X shares many of the same public voters with Old Candidate Y, then we can be fairly sure that the two will also drop or rise in tandem among private ballots. For example, Vizquel's support correlates most strongly with opposition to Bonds: as of Monday night, just 21% of known Bonds voters had voted for Vizquel, but 49% of non-Bonds voters had. Holding those numbers steady, I use my model's final prediction of the number of Bonds voters to figure out Vizquel's final percentage as well.

Here are the other ballot rookies' closest matches:

- Chipper voters correlate best with Bonds voters, though not super strongly, with the result that Chipper is expected to lose a little bit of ground on private ballots.

- Thome voters have a strong negative correlation with Manny Ramírez voters, so Thome is expected to gain ground in private balloting.

- Rolen voters correlate well with Larry Walker voters, giving Rolen a slight boost among private voters.

- Andruw voters are negatively correlated with Jeff Kent voters; in fact, no one has voted for both men. This gives Andruw a tiny bump in private balloting.

- It's a very small sample, but public Damon voters and public Bonds voters have zero overlap. Damon gets a decent-sized private-ballot bonus because of that.

- Santana voters are also inclined to vote for Gary Sheffield at high rates, although small-sample caveats apply. Therefore, Santana gets a slight boost in the private projections.

Finally, anyone with one or zero public votes is judged to be a non-serious candidate. Every year, one or two writers cast a misguided ballot for a Tim Wakefield or a Garret Anderson. There's little use in trying to predict these truly random events, so all of these players, including Jamie Moyer this year, have an Adjustment Factor of zero.)
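The first-time-candidate method described above boils down to a weighted sum, sketched here in Python. The 21% and 49% rates are the Vizquel figures quoted in the text; the function name and the 55% Bonds share are hypothetical stand-ins, since the projected number of Bonds voters isn't given here, and the sketch assumes the two rates hold steady across all ballots.

```python
def first_timer_share(anchor_share, rate_with_anchor, rate_without_anchor):
    """Project a newcomer's share from his correlation with an established candidate.

    anchor_share: projected share of ballots naming the established candidate.
    rate_with_anchor: newcomer's rate among voters who chose the anchor.
    rate_without_anchor: newcomer's rate among voters who did not.
    """
    return (anchor_share * rate_with_anchor
            + (1 - anchor_share) * rate_without_anchor)


# 0.21 and 0.49 are the public rates quoted above for Vizquel vs. Bonds voters.
# 0.55 is a made-up Bonds projection, used only to show the mechanics.
vizquel = first_timer_share(0.55, 0.21, 0.49)
print(round(100 * vizquel, 1))
```

Because Vizquel does better among non-Bonds voters, any drop in Bonds's projected share on private ballots pushes Vizquel's projection up, which is the "gain ground in private balloting" effect described in the bullets.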

Labels: Baseball, Hall of Fame, Number-Crunching, Predictions

Wednesday, January 3, 2018

State of the State Schedule 2018

Got any New Year's resolutions? Fifty governors do too. This is the time of the year when each state's chief executive gives his or her State of the State address, setting forth an ambitious (usually too ambitious) agenda for the year ahead. As we head into an election year that could usher in massive changes to state government, it's worth paying attention to the issues that those elections will be fought around. To that end, as Baseballot has provided every dang year since 2013, here is a full schedule of 2018's State of the State speeches. As each date passes, links will be added to the transcript of each speech; dates will also be updated as new orations are announced.

Alabama: January 9 at 6:30pm CT

Alaska: January 18 at 7pm AKT

Arizona: January 8 at 2pm MT

Arkansas: No speech in even-numbered years

California: January 25 at 10am PT

Colorado: January 11 at 11am MT

Connecticut: February 7 at noon ET

Delaware: January 18 at 2pm ET (State of the State); January 25 at 1pm ET (budget address)

Florida: January 9 at 11am ET

Georgia: January 11 at 11am ET

Hawaii: January 22 at 10am HAT

Idaho: January 8 at 1pm MT

Illinois: January 31 at noon CT (State of the State); February 14 at noon CT (budget address)

Indiana: January 9 at 7pm ET

Iowa: January 9 at 10am CT

Kansas: January 9 at 5pm CT (Brownback State of the State); January 31 at 3pm CT (Colyer inaugural); February 7 at 3pm CT (Colyer joint address)

Kentucky: January 16 at 7pm ET

Louisiana: February 19 at 5pm CT (special-session address); March 12 at 1pm CT (State of the State)

Maine: February 13 at 7pm ET

Maryland: January 31 at noon ET

Massachusetts: January 23 at 7pm ET

Michigan: January 23 at 7pm ET

Minnesota: March 14 at 7pm CT

Mississippi: January 9 at 5pm CT

Missouri: January 10 at 7pm CT

Montana: No speech in even-numbered years

Nebraska: January 10 at 10am CT

Nevada: No speech in even-numbered years

New Hampshire: February 15 at 10am ET

New Jersey: January 9 at 3pm ET (Christie State of the State); January 16 at 11am ET (Murphy inaugural); March 13 at 2pm ET (Murphy budget address)

New Mexico: January 16 at 1pm MT

New York: January 3 at 1pm ET (State of the State); January 16 at 1pm ET (budget address)

North Carolina: No speech in even-numbered years

North Dakota: January 23 at 10am CT

Ohio: March 6 at 7pm ET

Oklahoma: February 5 at 12:30pm CT

Oregon: February 5 at 9:30am PT

Pennsylvania: February 6 at 11:30am ET

Rhode Island: January 16 at 7pm ET

South Carolina: January 24 at 7pm ET

South Dakota: December 5 at 1pm CT (budget address); January 9 at 1pm CT (State of the State)

Tennessee: January 29 at 6pm CT

Texas: No speech in even-numbered years

Utah: January 24 at 6:30pm MT

Vermont: January 4 at 2pm ET (State of the State); January 23 at 1pm ET (budget address)

Virginia: January 10 at 6:30pm ET (McAuliffe State of the Commonwealth); January 13 at noon ET (Northam inaugural); January 15 at 7pm ET (Northam State of the Commonwealth)

Washington: January 9 at noon PT

West Virginia: January 10 at 7pm ET

Wisconsin: January 24 at 3pm CT

Wyoming: February 12 at 10am MT

National: January 30 at 9pm ET

Sunday, December 31, 2017

Follow Every Election in 2018 with This Calendar

In the year that is now almost complete, people took a practically unprecedented interest in politics. Granted, it was generally concentrated on one side of the political spectrum, but in 2017 people demonstrated in our nation's capital, called their congressmen and congresswomen, and voted. Boy, did they vote. Turnout in inconveniently timed special elections for GA-06 and the U.S. Senate seat in Alabama surpassed that of even regularly scheduled midterm elections. And people—again, mainly on the left—who had never before felt a stake in their local races began religiously following legislative election results—even in districts all the way across the country.

This was a welcome development to me as a charter member of Election Night Twitter. I've always enjoyed following minor special or local election results as idle entertainment on a Tuesday night, the same as I might sit down to watch a random A's-Twins game when my teams have an off day, but this year I was joined by so many engaged netizens eager to see "the Resistance" strike its next blow. Suddenly, it wasn't idle entertainment anymore; every week's elections became appointment viewing.

To keep to those appointments, I found I needed a calendar—so I started one. To my knowledge, no one has tried to create a comprehensive schedule of obscure elections before. Each state's election office has a listing of upcoming elections, but you have to visit 50 different websites to find them all. Fellow psephology nerds like Daily Kos Elections and Ballotpedia—both of whom I am indebted to in the compilation of my own calendar—have admirably assembled calendars of different types of elections but haven't taken the final, ultimate step.

So I present to you, election-obsessed people of the internet, this Google Calendar for all to view. My calendar will track every federal, state, and local* election in the country from January 1, 2018, all the way through the midterm general election—and beyond. If you find that I'm missing any, please let me know on Twitter. Enjoy!

*In localities of significant size; I draw the line at the Union City, Pennsylvania, school board.
